In recent years, there has been an increase in news about car accidents involving elderly drivers. While they are encouraged to return their driver’s licenses, living without an alternative means of transportation can be difficult, especially for those living in rural areas or those with limited mobility. In this context, the concept of “MaaS (Mobility as a Service)”—which seamlessly connects various modes of transportation such as trains, buses, taxis, rental cars, and bike-sharing for route searches, reservations, and payments—has been gaining attention. It is essential to maintain bus services and improve their convenience, especially for the last mile from the station to home. However, how can we provide information to generations that are not adept at using smartphones? We asked Associate Professor Hidekazu Suzuki for his insights.

The installation of smart bus stops fully utilizing IoT and ICT technologies

MaaS has become a frequently discussed topic in Japan over the past few years. Currently, each operator handles payments and reservations for its own trains and buses, so users must switch between different applications. With MaaS, transfers, payments, and reservations across multiple operators could be handled through a single service, which would be far more convenient for users. Unifying these services is challenging, however, because each company creates its schedules and location information in a different format, and bus operators in particular often lack well-organized data.

To address this issue, an initiative to introduce GTFS (General Transit Feed Specification), the global standard format for bus information, began in Japan around 2016. Private companies operating bus services nationwide, municipalities, and university researchers are working together to prepare data in the Japanese version of the format (GTFS-JP). I am also collaborating with local governments in Aichi Prefecture on organizing this data.

Now, it has become easier to search for bus routes and transfer information using Google Maps and other services. This improvement is due to the organization of GTFS-JP data and its integration into Google’s system. Real-time information is also available, allowing users to know how many minutes a bus will be delayed and the timing of the next connecting train.
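To give a sense of why a shared format matters, here is a minimal sketch of how an application might look up the next departures at a stop from GTFS data. The column names (`trip_id`, `departure_time`, `stop_id`) follow the GTFS `stop_times.txt` file; the sample rows, stop IDs, and the helper names `to_seconds` and `next_departures` are made up for illustration and are not taken from any actual operator's feed or from the laboratory's system.

```python
import csv
import io

# Tiny in-memory stand-in for a GTFS stop_times.txt file (all values are made up).
STOP_TIMES_TXT = """trip_id,arrival_time,departure_time,stop_id,stop_sequence
T1,08:00:00,08:00:00,S1,1
T1,08:10:00,08:10:00,S2,2
T2,08:30:00,08:30:00,S1,1
T2,08:40:00,08:40:00,S2,2
T3,25:05:00,25:05:00,S1,1
"""


def to_seconds(hhmmss):
    """Convert a GTFS time such as '25:05:00' to seconds since the service day began.

    GTFS allows hours above 24 for trips that run past midnight,
    so the value is parsed by hand rather than with datetime.
    """
    hours, minutes, seconds = (int(part) for part in hhmmss.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def next_departures(stop_times_file, stop_id, now, limit=2):
    """Return up to `limit` (departure_time, trip_id) pairs at `stop_id` at or after `now`."""
    rows = csv.DictReader(stop_times_file)
    upcoming = [
        (row["departure_time"], row["trip_id"])
        for row in rows
        if row["stop_id"] == stop_id
        and to_seconds(row["departure_time"]) >= to_seconds(now)
    ]
    upcoming.sort(key=lambda pair: to_seconds(pair[0]))
    return upcoming[:limit]


print(next_departures(io.StringIO(STOP_TIMES_TXT), "S1", "08:15:00"))
```

Because every operator's timetable uses the same columns, the same few lines work for any feed. Delay information would come from a separate real-time feed, which this sketch does not cover.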

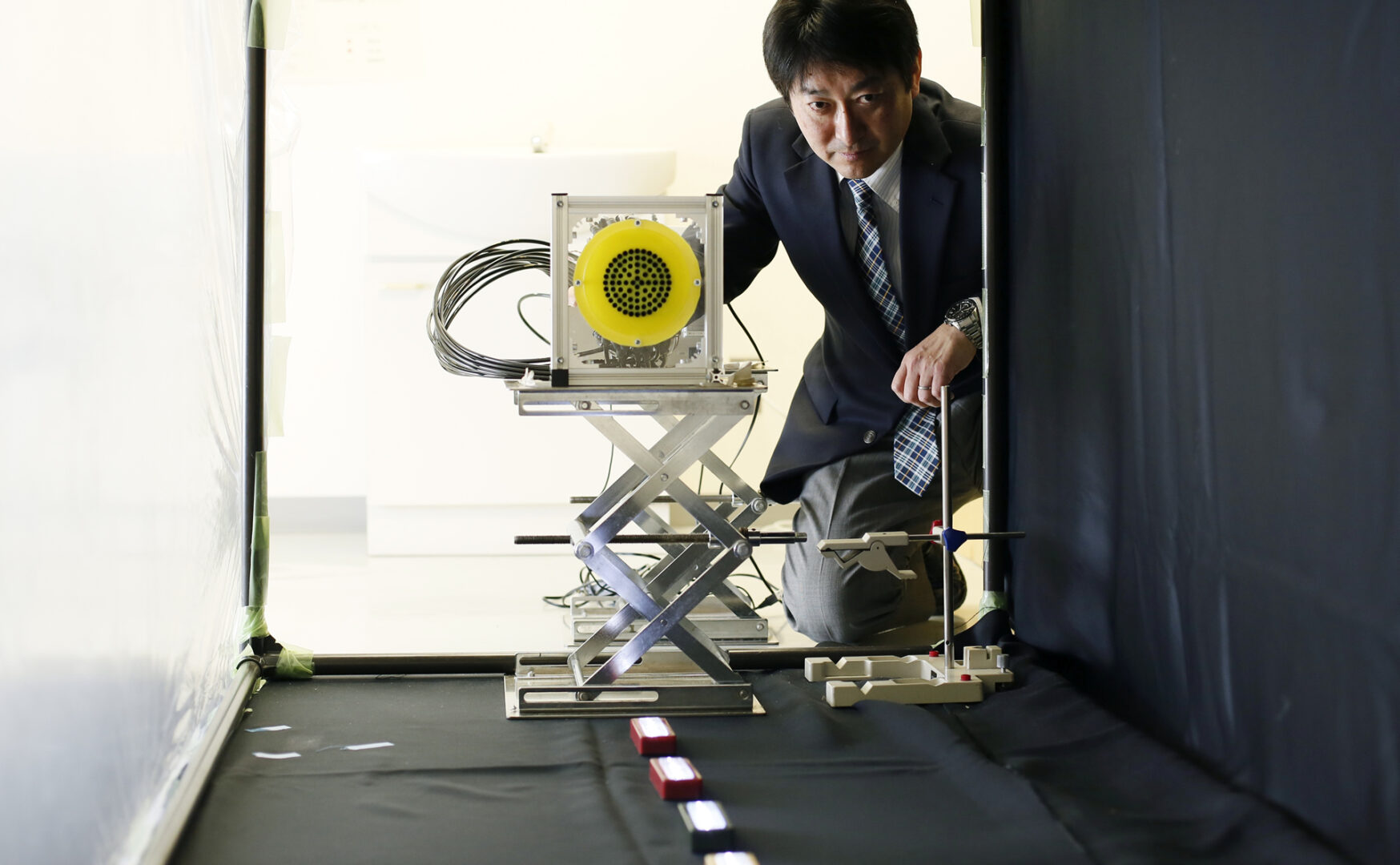

Bus operators are developing bus location systems to monitor their operations. At major terminal bus stops, displays show information such as the next arriving bus and any delays. However, most bus stops only have timetables posted. Therefore, in my laboratory, we are working on the research and development of smart bus stops that fully utilize IoT (Internet of Things) and ICT (Information and Communication Technology) in collaboration with the city of Nisshin, which operates the “Kururin Bus” community bus.

For bus stops located in rural or suburban areas, one challenge is the difficulty of installing power supply systems to display real-time information. To address this, we developed bus stops using energy-efficient electronic paper powered solely by solar energy. The display shows announcements about bus operations from the local government and disaster information, making it useful not only as a bus stop but also as an information dissemination point in the community. Despite the widespread use of smartphones, many elderly people still cannot use them. Especially during disasters, it’s crucial to be able to receive real-time information, and we hope to widely implement these smart bus stops to achieve that.

Feeling Connected to Society Through Research

The bus initiatives in Nisshin City stemmed from discussions with Professor Yukimasa Matsumoto from the Department of Civil Engineering and Architecture, starting about ten years ago. The actual systems are developed by students, allowing them to experience firsthand how their research and activities directly impact society.

My expertise lies in computer networks and ubiquitous computing. I’ve always been interested in using this technology and knowledge to solve everyday problems. The development of smart bus stops and bus location systems originated from the idea of applying IoT technology. There are many societal issues that ICT technology can address, and recognizing these opportunities can lead to significant innovations. Staying informed about the world and collaborating with people from other fields to leverage each other’s knowledge and technology for co-creation is crucial. I encourage students to take an interest in various fields beyond information engineering, engage with the broader world, and participate in diverse activities.

Interview Date: April 14, 2021

In 1968, Ivan Sutherland in the United States developed the world’s first head-mounted display. Since that invention, various research institutions have begun working on VR, and now VR, which presents audiovisual experiences, has transitioned into industrial fields. In the future, in addition to hardware, both software and content will become increasingly important. Moreover, research in VR focusing on two types of sensory perception—force feedback, which allows us to feel weight and pressure, and tactile feedback, which lets us perceive surface textures like smoothness or roughness—has been progressing from early on. However, even with these advancements, we still can’t fully replicate the feeling of being present in a location—the “atmosphere” of the place. It feels as if we are experiencing VR through a spacesuit. Therefore, I believe that scents will play a crucial role in making people feel truly present, and I am advancing research in VR that appeals to the sense of smell.

I believe there are various ways to utilize VR with scents. For example, it could be used as an advertising tool. Think of the enticing aroma wafting from an eel restaurant, which acts as a form of advertisement by drawing in passersby with the smell of grilled eel. We could do something similar on an individual basis. In shopping malls, sensors could identify the attributes of people passing by and subtly introduce corresponding scents. This kind of application might not be too far off in the future.

Recommendation systems are now ubiquitous in music and for books, clothing, real estate properties, and even friends, suggesting personalized options for us in various domains. The mechanism behind these systems is relatively straightforward, relying on machine learning techniques to predict whether a user is likely to purchase an item based on their purchase history, the content of purchased items, and demographic information such as age and gender. Two methods commonly used in these predictions are “item-based collaborative filtering” and “user-based collaborative filtering.” Item-based collaborative filtering identifies and recommends items similar to the ones currently being viewed by the user, and this approach is reportedly employed by some of the world’s leading e-commerce companies. In contrast, user-based collaborative filtering identifies users in the database who have similar purchase histories or attributes to the current user and recommends products those similar users have purchased. While both systems are relatively straightforward, they require vast data processing.
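The two approaches can be illustrated with a toy example. The sketch below assumes purchase histories are stored as simple sets and uses cosine similarity as the similarity measure, which is one common choice rather than the method of any particular company. The data and the function names `similar_items` and `user_based` are hypothetical.

```python
import math

# Hypothetical purchase data: user -> set of purchased items.
PURCHASES = {
    "u1": {"book_a", "book_b"},
    "u2": {"book_a", "book_b", "book_c"},
    "u3": {"book_b", "book_c"},
    "u4": {"book_d"},
}


def cosine(set_a, set_b):
    """Cosine similarity between two binary vectors represented as sets."""
    if not set_a or not set_b:
        return 0.0
    return len(set_a & set_b) / math.sqrt(len(set_a) * len(set_b))


def item_buyers(purchases):
    """Invert user -> items into item -> set of users who bought it."""
    buyers = {}
    for user, items in purchases.items():
        for item in items:
            buyers.setdefault(item, set()).add(user)
    return buyers


def similar_items(purchases, viewed_item, top_n=2):
    """Item-based: rank other items by how similar their buyers are to the viewed item's buyers."""
    buyers = item_buyers(purchases)
    scores = [
        (other, cosine(buyers[viewed_item], users))
        for other, users in buyers.items()
        if other != viewed_item
    ]
    scores = [(item, score) for item, score in scores if score > 0]
    scores.sort(key=lambda pair: (-pair[1], pair[0]))
    return scores[:top_n]


def user_based(purchases, user, k=1):
    """User-based: recommend items bought by the k most similar other users."""
    mine = purchases[user]
    neighbours = sorted(
        (other for other in purchases if other != user),
        key=lambda other: (-cosine(mine, purchases[other]), other),
    )[:k]
    recommended = set()
    for other in neighbours:
        recommended |= purchases[other] - mine
    return sorted(recommended)


print(similar_items(PURCHASES, "book_a"))
print(user_based(PURCHASES, "u1"))
```

Even in this small form, every recommendation compares one item or user against all the others, which hints at why real systems with millions of users need large-scale data processing.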

Data analysis can be applied in various fields. For example, some students use statistical methods to analyze sports data under the keyword “data analysis.” In contrast, others employ a method called “cluster analysis,” which finds similar data groups within large datasets to analyze shoppers’ behavior and contribute to revitalizing shopping districts.
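As a rough illustration of cluster analysis, the sketch below groups shoppers with k-means, one widely used clustering technique. The text does not say which method the students use, so k-means is an assumption here, as are the made-up data points (visits per month, average spend) and the fixed starting centroids.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean_point(points):
    """Component-wise mean of a non-empty list of points."""
    return tuple(sum(values) / len(points) for values in zip(*points))


def kmeans(points, centroids, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then recompute centroids."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(
                range(len(centroids)),
                key=lambda i: squared_distance(p, centroids[i]),
            )
            clusters[nearest].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [
            mean_point(members) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical shoppers: (visits per month, average spend per visit).
SHOPPERS = [(1, 10), (2, 12), (1.5, 11), (8, 50), (9, 55), (8.5, 52)]

centroids, clusters = kmeans(SHOPPERS, centroids=[SHOPPERS[0], SHOPPERS[3]])
print(centroids)
print(clusters)
```

Here the shoppers fall into an occasional, low-spend group and a frequent, high-spend group, the kind of grouping that could then inform measures for a shopping district.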

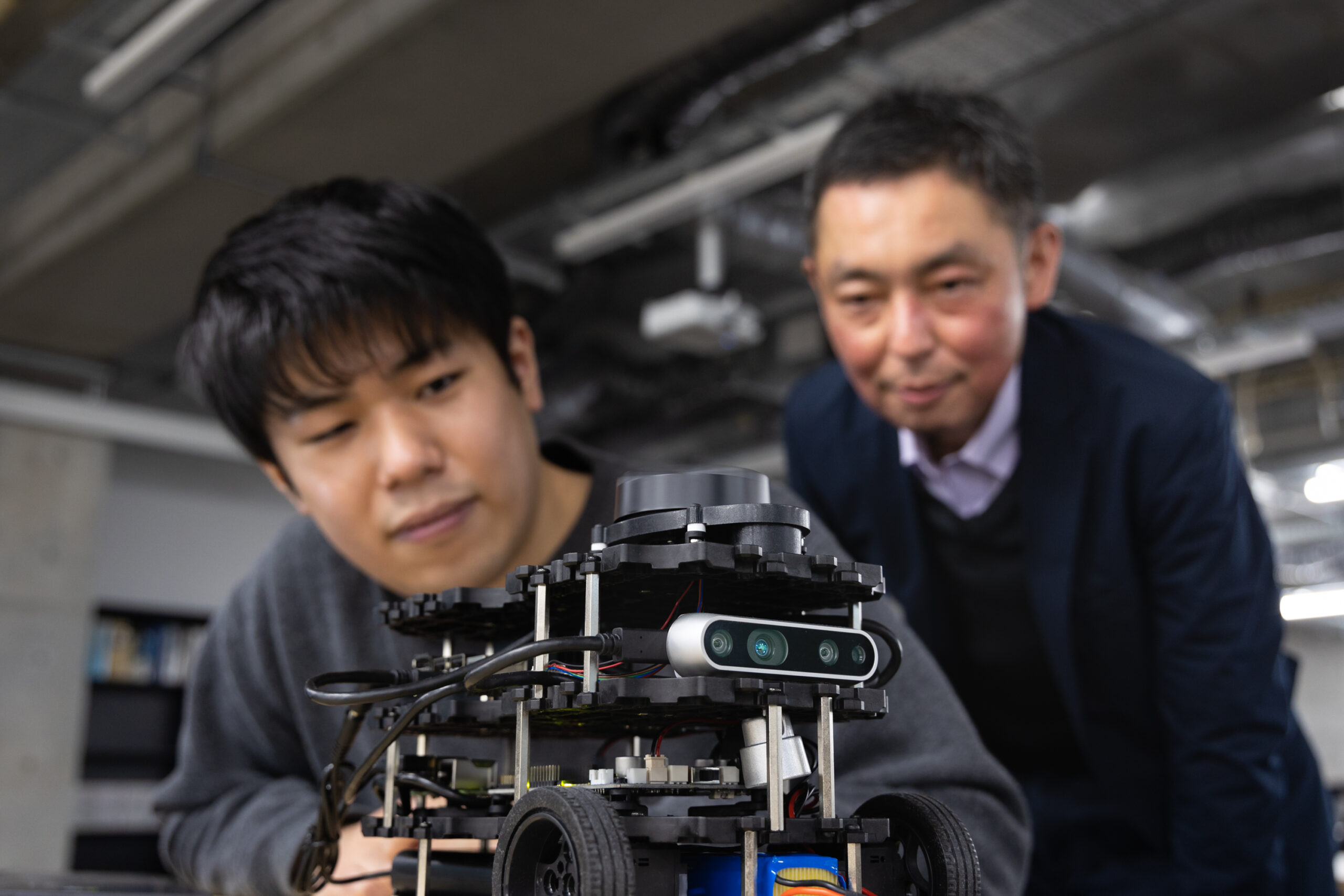

Currently, there are four major issues concerning AI and security. One is the ability to “deceive AI.” For example, the cameras installed in self-driving cars are supposed to recognize stop signs and bring the vehicles to a halt. However, it has been found that a slightly altered stop sign can look normal to the human eye but unrecognizable to a self-driving car, thus risking collisions with oncoming traffic. Moreover, self-driving cars use “distance sensors” to measure distances between vehicles. These sensors calculate distance by emitting laser beams and measuring the time it takes for them to bounce back from various objects. If someone introduces alternate beams, this can affect the measuring of distance. Tampering with external data required for AI’s decision making has become a major issue.
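The distance calculation itself is simple: the beam travels to the object and back, so the distance is the speed of light multiplied by the round-trip time, divided by two. The sketch below shows that formula and a deliberately simplified picture of what an injected beam could do. The "naive receiver" that trusts the earliest returning pulse is an assumption made for illustration, not a description of any real sensor, and all function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s):
    """Distance to the reflecting object: the light covers the path twice."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_distance(distance_m):
    """Round-trip time a genuine echo would take for an object at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


def naive_range(pulse_arrival_times_s):
    """Toy receiver (an assumption): trusts whichever pulse arrives first."""
    return distance_from_round_trip(min(pulse_arrival_times_s))


# A genuine echo from a vehicle 30 m ahead.
genuine_echo = round_trip_for_distance(30.0)

# An injected pulse timed to look like an echo from an object 5 m ahead.
injected_pulse = round_trip_for_distance(5.0)

print(round(naive_range([genuine_echo]), 3))
print(round(naive_range([genuine_echo, injected_pulse]), 3))
```

In this toy model a single injected pulse makes the sensor report an obstacle at about 5 m instead of 30 m, which is the kind of tampering with input data that the AI downstream has no way to notice on its own.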

In the future, various types of AI will be used in numerous fields and involve combinations of technologies, such as “AI × 〇〇 × □□.” Drawing on my expertise in hardware security, I am currently researching how to protect AI from malicious attackers by integrating “AI × security” and “AI × security × hardware.” Although this field is still in its early stages, I believe it is crucial for AI’s real-world applications.
