Four Security Challenges Related to AI

Currently, there are four major issues concerning AI and security. The first is the ability to “deceive AI.” For example, the cameras installed in self-driving cars are supposed to recognize stop signs so that the vehicles can come to a halt. However, it has been found that a slightly altered stop sign can look normal to the human eye yet be unrecognizable to a self-driving car, risking collisions with oncoming traffic. Self-driving cars also use “distance sensors” to measure the distance to other vehicles. These sensors emit laser beams and calculate distance from the time it takes the light to bounce back from surrounding objects. If an attacker injects spoofed laser signals, the sensor can be tricked into measuring false distances. Tampering with the external data that AI relies on to make decisions has become a major issue.
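
To make the altered-stop-sign example concrete, here is a minimal sketch of one well-known way to craft such an alteration, the fast gradient sign method (FGSM), applied to a toy linear classifier. The weights, pixel values, and perturbation budget are invented for illustration, and the interview does not say which technique was used in the cases it mentions; real detectors are deep networks and real attacks are more involved.

```python
# A toy linear "stop sign detector": a positive score means "stop sign".
# Weights, bias, and pixel values are invented for illustration only.
WEIGHTS = [0.9, -0.4, 0.7, 0.6, -0.3, 0.8]
BIAS = -1.0


def score(pixels):
    """Linear decision score dot(w, x) + b for a flattened image."""
    return sum(w * x for w, x in zip(WEIGHTS, pixels)) + BIAS


def classify(pixels):
    return "stop" if score(pixels) > 0 else "not stop"


def sign(value):
    """Return +1, -1, or 0 according to the sign of value."""
    return (value > 0) - (value < 0)


def fgsm_perturb(pixels, epsilon):
    """Shift each pixel by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input is
    simply WEIGHTS, so the fast-gradient-sign step is x - epsilon * sign(w).
    Results are clipped to the valid pixel range [0, 1].
    """
    return [min(1.0, max(0.0, x - epsilon * sign(w)))
            for x, w in zip(pixels, WEIGHTS)]


original = [0.8, 0.2, 0.7, 0.6, 0.3, 0.7]
adversarial = fgsm_perturb(original, epsilon=0.3)
print(classify(original), "->", classify(adversarial))  # prints: stop -> not stop
```

Each pixel moves by at most 0.3, yet the score drops by 0.3 × (sum of |w|) = 1.11, enough to flip the decision. With only six “pixels” the budget has to be fairly large; in a real image with many thousands of pixels, the same effect can be reached with per-pixel changes too small to notice.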


The second issue is protecting AI from side-channel attacks. These attacks use physical information such as power consumption, electromagnetic radiation, and processing time to infer internal data and cryptographic keys. To prevent them, this physical information must be decoupled from AI’s internal data, so that observing it reveals nothing about what the AI is processing.
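
Power and electromagnetic measurements require physical hardware, but the timing variant can be illustrated in software. The sketch below assumes a hypothetical device that checks a secret key byte by byte and stops at the first mismatch; a step counter stands in for measured processing time, and the attacker is assumed to know the key length. Python's standard `hmac.compare_digest` is shown as one way to remove this particular leak, since it avoids content-based short-circuiting.

```python
import hmac

SECRET_KEY = b"k3y-2021"  # hypothetical secret held inside the device


def naive_equal(secret: bytes, guess: bytes) -> tuple[bool, int]:
    """Byte-by-byte comparison that stops at the first mismatch.

    Also returns the number of bytes examined, a stand-in for processing
    time: the more leading bytes match, the longer the check runs.
    """
    steps = 0
    if len(secret) != len(guess):
        return False, steps
    for s, g in zip(secret, guess):
        steps += 1
        if s != g:
            return False, steps
    return True, steps


def recover_key(length: int) -> bytes:
    """Recover the key one byte at a time by keeping the slowest-rejected guess."""
    known = b""
    for position in range(length):
        best_byte, best_steps = 0, -1
        padding = b"\x00" * (length - position - 1)
        for candidate in range(256):
            guess = known + bytes([candidate]) + padding
            ok, steps = naive_equal(SECRET_KEY, guess)
            if ok:
                return guess
            if steps > best_steps:
                best_byte, best_steps = candidate, steps
        known += bytes([best_byte])
    return known


def constant_time_equal(secret: bytes, guess: bytes) -> bool:
    """Comparison whose running time does not depend on where the first mismatch is.

    hmac.compare_digest avoids short-circuiting on content; the length of the
    inputs may still leak.
    """
    return hmac.compare_digest(secret, guess)


print(recover_key(len(SECRET_KEY)))  # prints: b'k3y-2021'
```

The attack needs at most 256 guesses per byte instead of 256 to the power of the key length, which is why leaking even a coarse timing signal is so damaging.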

The third issue is protecting AI’s training data. It is said that the data used to train an AI can be inferred by analyzing its judgments. Because AI is used in the medical field, where it handles patients’ medical records and other highly sensitive data, this creates a risk that personal and private information will be leaked. It is therefore crucial to ensure that AI’s training data cannot be inferred.
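
One form of this attack, known as membership inference, decides whether a given record was part of the training set by looking at how confident the model is about it. The sketch below is a toy, assuming a hypothetical overfitted model whose confidence is highest on records it has memorized; the records, the `model_confidence` function, and the 0.9 threshold are all invented for illustration.

```python
import math

# Hypothetical training records: (age, systolic blood pressure).
TRAINING_RECORDS = [(34, 118), (51, 142), (67, 155), (45, 130)]


def model_confidence(record):
    """Stand-in for an overfitted model's confidence in its own prediction.

    It behaves like a model that has memorized its training set: confidence
    is 1.0 on an exact training record and falls off with the Euclidean
    distance to the nearest one.
    """
    nearest = min(math.dist(record, r) for r in TRAINING_RECORDS)
    return 1.0 / (1.0 + nearest)


def guess_membership(record, threshold=0.9):
    """Confidence-threshold attack: a very confident answer suggests 'was in the training data'."""
    return model_confidence(record) >= threshold


for record in [(51, 142), (52, 140)]:
    print(record, guess_membership(record))
# prints:
# (51, 142) True
# (52, 140) False
```

The attack works only because the model behaves differently on records it was trained on; defenses aim to shrink that difference so that its judgments no longer reveal who was in the data.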

The fourth issue is preventing the contamination of training data. Using a method called “poisoning,” a hacker can deliberately corrupt training data so that the AI makes incorrect judgments, for instance, recognizing “B” as “A.” Numerous instances of poisoning have been reported.
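
As an illustration, here is a minimal sketch of one simple form of poisoning: injecting a few mislabeled samples into the training set of a k-nearest-neighbor classifier so that a chosen “B” input is recognized as “A.” The classifier, data, and poisoned points are hypothetical, and the interview does not say which poisoning methods were involved in the reported cases.

```python
from collections import Counter


def knn_predict(training_set, point, k=3):
    """Label `point` by majority vote among its k nearest training samples."""
    def sq_dist(sample):
        features, _label = sample
        return sum((f - p) ** 2 for f, p in zip(features, point))

    nearest = sorted(training_set, key=sq_dist)[:k]
    votes = Counter(label for _features, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical clean training data: two well-separated clusters.
clean = [((0, 0), "A"), ((1, 0), "A"), ((0, 1), "A"), ((1, 1), "A"),
         ((9, 9), "B"), ((10, 9), "B"), ((9, 10), "B"), ((10, 10), "B")]

# The attacker slips in two points that sit in B's region but carry label "A".
poison = [((9.5, 9.6), "A"), ((9.6, 9.5), "A")]
poisoned = clean + poison

target = (9.5, 9.5)  # an input that clearly belongs to the "B" cluster
print("clean model:   ", knn_predict(clean, target))     # -> B
print("poisoned model:", knn_predict(poisoned, target))  # -> A
```

Only two of ten training samples are corrupted, and inputs far from the poisoned region (such as `(0.5, 0.5)`) are still classified correctly, which is part of what makes poisoning hard to detect.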

Protecting AI from Malicious Attackers

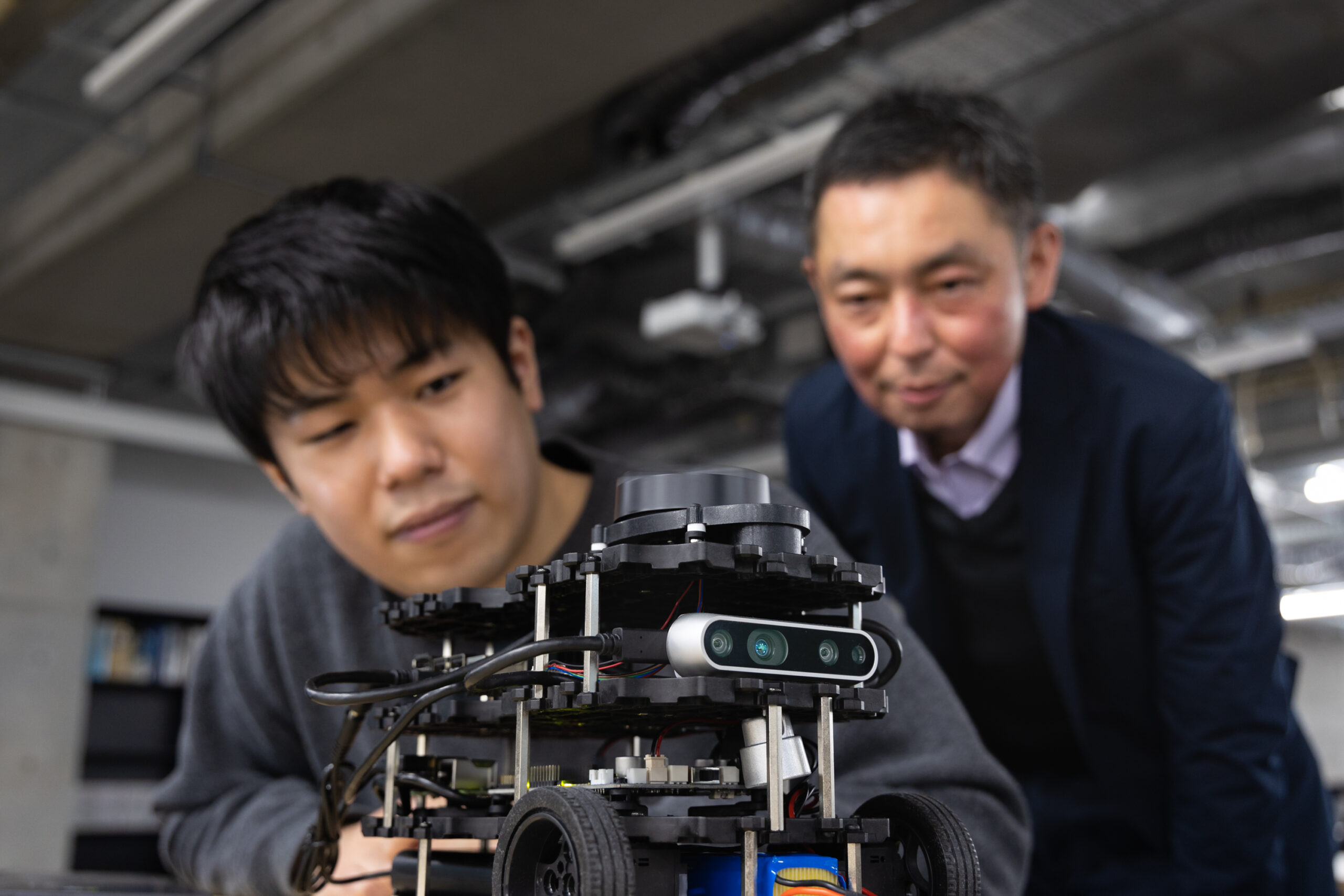

In the future, various types of AI will be used in numerous fields, often in combination with other technologies, as in “AI × 〇〇 × □□.” Drawing on my expertise in hardware security, I am currently researching how to protect AI from malicious attackers by integrating “AI × security” and “AI × security × hardware.” Although this field is still in its early stages, I believe it is crucial for applying AI in the real world.


As more and more jobs shift to AI, we must consider its trustworthiness. For instance, AI used in autonomous driving, or in facial recognition systems that detect suspicious individuals, directly affects human lives. Should problems arise, they could lead to social unrest. Moreover, hackers could extract valuable information without being detected.

Hackers exploit every possible angle to target the most vulnerable points. As long as there are hostile actors who follow no rules, security challenges will remain. Still, I believe it is our university’s mission to think five to ten years ahead to ensure safety and security.

Interview Date: January 22, 2021